QLoRA Fine-Tuned Qwen LLM: A Traditional Chinese Medical Assistant

Project type

Fine-tuning of LLMs

🧪 This project brings to life a Traditional Chinese Medicine (TCM) AI assistant, crafted to serve as a knowledgeable advisor, particularly for elderly people seeking guidance on TCM practices. It demonstrates the power of fine-tuning open-source Large Language Models (LLMs) on limited resources—specifically consumer-grade GPUs, rather than data center-grade hardware such as NVIDIA A100 or multi-GPU clusters.

🧪 By harnessing Kaggle notebooks, the project leverages Unsloth’s 4-bit quantization and QLoRA (Quantized Low-Rank Adaptation) for Parameter-Efficient Fine-Tuning (PEFT), enabling efficient training of Alibaba’s Qwen 2.5 models. The 7B model offers speed for resource-constrained setups, while the 14B model delivers richer performance with its larger parameter count.
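A minimal sketch of how a 4-bit Qwen 2.5 model might be loaded and wrapped with LoRA adapters through Unsloth. The checkpoint name, sequence length, and every LoRA hyperparameter below are assumptions chosen for illustration; the write-up does not state the values actually used.

```python
# Illustrative settings only: none of these values come from the project itself.
MODEL_NAME = "unsloth/Qwen2.5-7B-Instruct-bnb-4bit"  # assumed checkpoint name
MAX_SEQ_LENGTH = 2048                                 # assumed context length

# Assumed LoRA hyperparameters; the adapters attach to the attention and MLP projections.
LORA_CONFIG = {
    "r": 16,
    "lora_alpha": 16,
    "lora_dropout": 0.0,
    "bias": "none",
    "target_modules": [
        "q_proj", "k_proj", "v_proj", "o_proj",
        "gate_proj", "up_proj", "down_proj",
    ],
}


def load_qlora_model():
    """Load the 4-bit base model and attach trainable LoRA adapters."""
    # Imported lazily because Unsloth needs a CUDA GPU (e.g. a Kaggle GPU session).
    from unsloth import FastLanguageModel

    model, tokenizer = FastLanguageModel.from_pretrained(
        model_name=MODEL_NAME,
        max_seq_length=MAX_SEQ_LENGTH,
        load_in_4bit=True,  # keep the frozen base weights in 4-bit precision
    )
    # Only the low-rank adapter weights become trainable.
    model = FastLanguageModel.get_peft_model(model, **LORA_CONFIG)
    return model, tokenizer
```

Switching to the 14B model would, under the same assumptions, only mean changing `MODEL_NAME` to the corresponding checkpoint.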

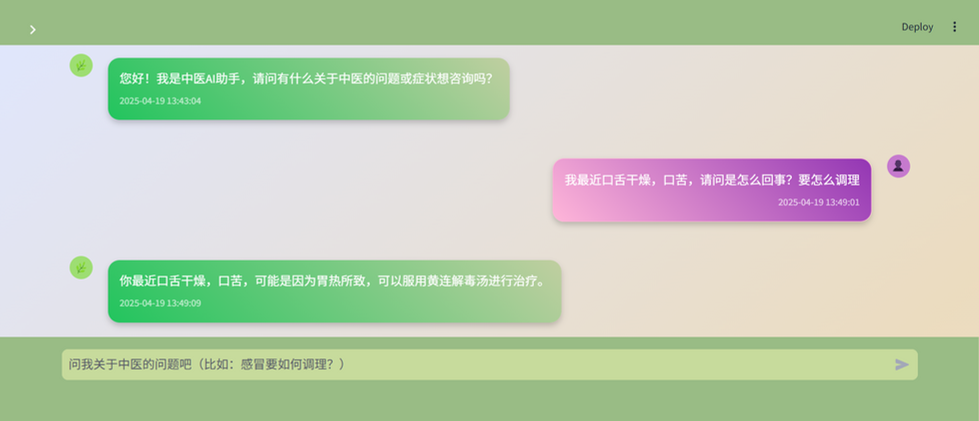

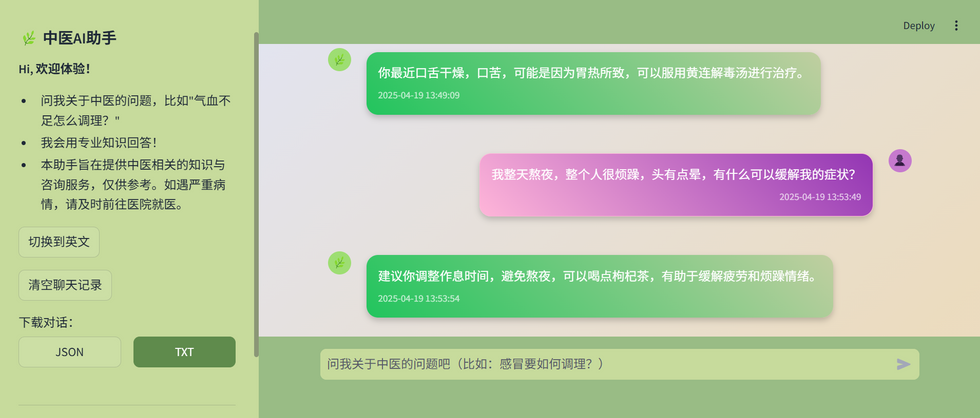

🧪 Tailored for the TCM domain, the assistant is trained on a Chinese-language TCM Q&A dataset of about 3 million records that includes classical herb names and ancient terminology. As a result it performs best in Mandarin, though it can handle English queries with slightly less precision because of the dataset’s Chinese focus. Qwen 2.5 was chosen over other LLMs, such as Meta’s Llama 3.1 or Mistral AI’s Mistral series, for its stronger Mandarin capabilities, which suit TCM’s nuanced vocabulary. This approach highlights the potential of fine-tuning LLMs for specialized, non-English domains.
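As a rough illustration of how one Q&A record could be turned into a training example, the sketch below renders a question and answer in the ChatML layout that Qwen 2.5 chat models use. The field names, the system prompt, and the sample record are hypothetical; in practice the tokenizer's own `apply_chat_template` would be the safer way to produce this text.

```python
# Assumed system prompt; the project's actual wording is not given.
SYSTEM_PROMPT = "You are a knowledgeable Traditional Chinese Medicine assistant."


def to_chatml(question: str, answer: str, system: str = SYSTEM_PROMPT) -> str:
    """Render one Q&A pair as a ChatML-formatted training string."""
    turns = [("system", system), ("user", question), ("assistant", answer)]
    return "".join(
        f"<|im_start|>{role}\n{text.strip()}<|im_end|>\n" for role, text in turns
    )


# Made-up record with hypothetical field names, for illustration only.
record = {
    "question": "黄芪有什么功效？",
    "answer": "(reference answer from the dataset goes here)",
}
text = to_chatml(record["question"], record["answer"])
print(text)
```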

🧪 The technical approach emphasizes accessibility and efficiency. Unsloth’s 4-bit quantization reduces the memory needed to hold the base model, while QLoRA keeps those quantized weights frozen and trains only small low-rank adapter matrices, so fine-tuning adds little computational overhead. This setup empowers developers with limited resources to create domain-specific tools. The assistant bridges traditional wisdom with modern technology, offering users an intuitive way to explore TCM knowledge.
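The memory argument can be made concrete with some back-of-the-envelope arithmetic. The sketch below uses nominal parameter counts (7e9 and 14e9) and a hypothetical 4096 x 4096 projection matrix. It counts weight storage only and ignores activations, optimizer state, and quantization metadata, so real usage will be higher.

```python
def weight_memory_gib(n_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to store the weights alone, in GiB."""
    return n_params * bits_per_param / 8 / 2**30


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_in x d_out weight matrix."""
    # LoRA learns two thin matrices: (d_in x rank) and (rank x d_out).
    return rank * (d_in + d_out)


for name, n in [("7B", 7e9), ("14B", 14e9)]:
    fp16 = weight_memory_gib(n, 16)
    int4 = weight_memory_gib(n, 4)
    print(f"{name}: 16-bit ~{fp16:.1f} GiB, 4-bit ~{int4:.1f} GiB")

# Hypothetical layer shape and rank, for illustration only.
full = 4096 * 4096
adapter = lora_params(4096, 4096, rank=16)
print(f"adapter is {adapter / full:.2%} of the full matrix")
```

Under these assumptions, 4-bit storage takes a quarter of the 16-bit footprint, and the adapter for a single matrix is under one percent of that matrix's size.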

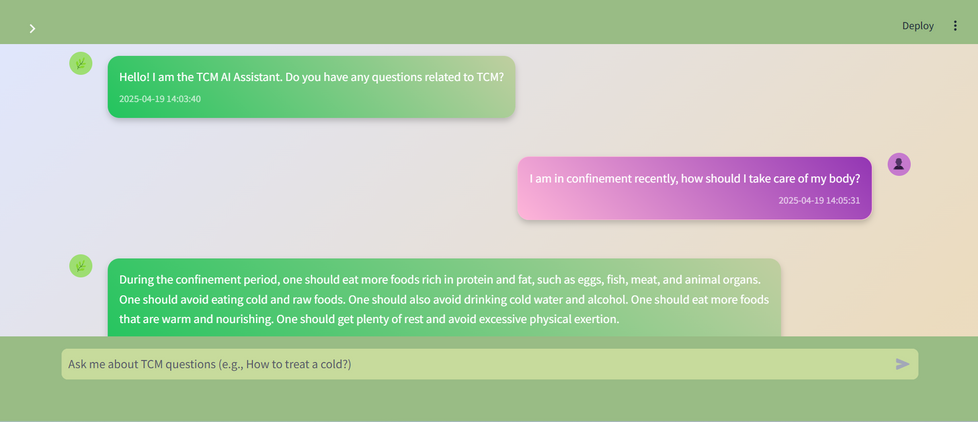

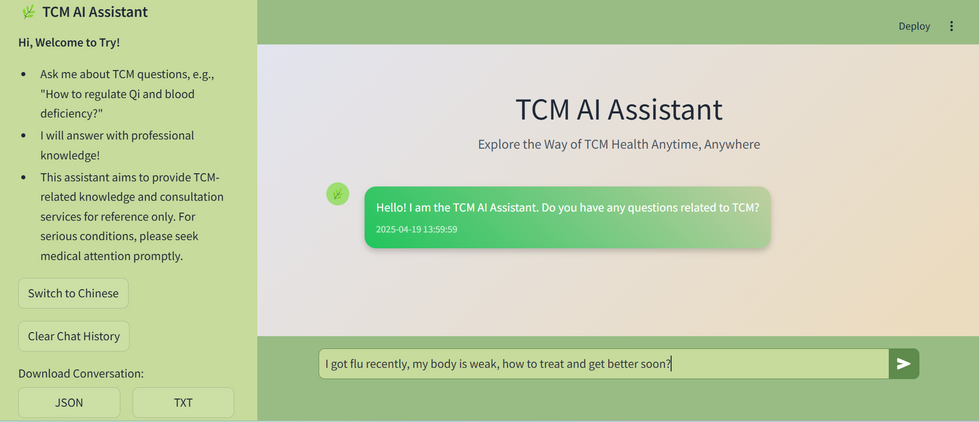

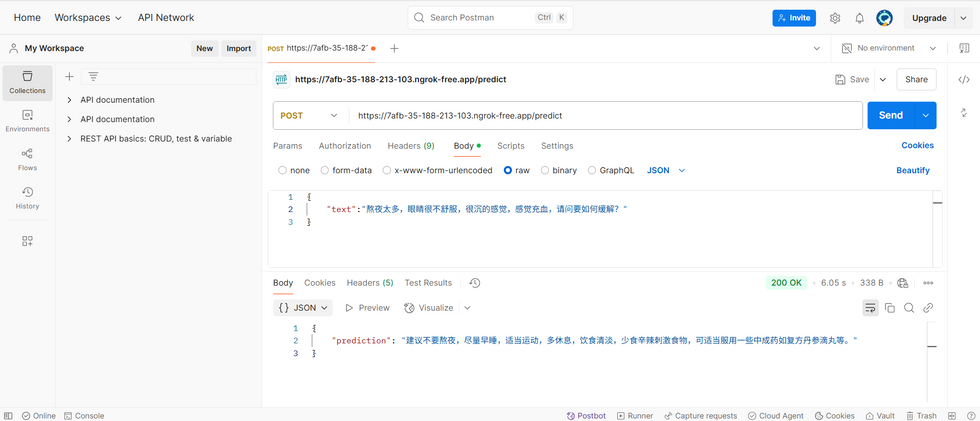

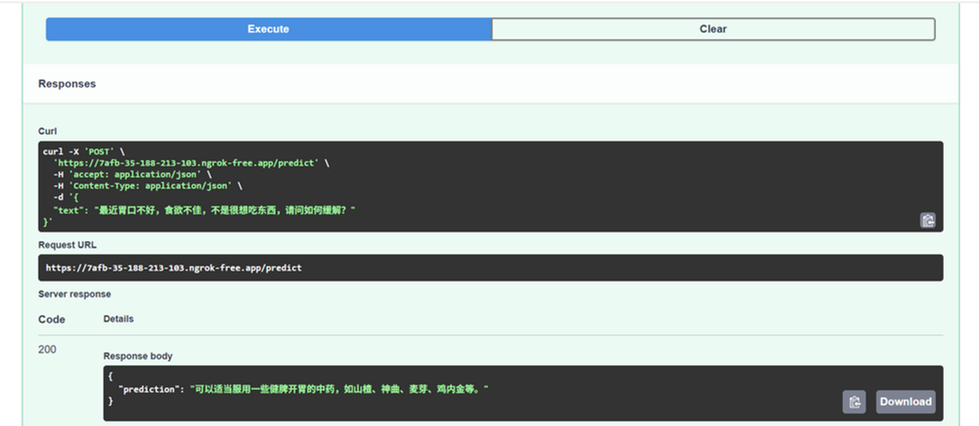

🧪 The chatbot is deployed as a web application using FastAPI, with Pyngrok providing a secure tunnel that makes it publicly accessible. An interactive front-end built with Streamlit uses session state to keep the conversation history across reruns, creating a seamless conversational experience. The API was tested with FastAPI's Swagger UI and Postman to check that it behaves reliably. This project demonstrates the ability to build, fine-tune, deploy, and visualize an end-to-end AI solution tailored to a specific domain.
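The session-state pattern described above can be sketched without any web framework. Here a plain dict stands in for Streamlit's `st.session_state`, and `ask_backend` is a hypothetical callable standing in for the HTTP request to the FastAPI service; the project's real endpoint and payload are not documented in this write-up.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def init_state(state: dict) -> None:
    """Create the message history once; later reruns reuse it."""
    if "messages" not in state:
        state["messages"] = []


def chat_turn(
    state: dict,
    user_text: str,
    ask_backend: Callable[[List[Message]], str],
) -> str:
    """Record the user message, query the backend, and record the reply."""
    init_state(state)
    state["messages"].append({"role": "user", "content": user_text})
    reply = ask_backend(state["messages"])
    state["messages"].append({"role": "assistant", "content": reply})
    return reply


def echo_backend(messages: List[Message]) -> str:
    """Stand-in backend that reports how many messages it received."""
    return f"(reply to turn {len(messages)})"


session: dict = {}  # plays the role of st.session_state
chat_turn(session, "What is ginseng used for?", echo_backend)
chat_turn(session, "And how is it prepared?", echo_backend)
for message in session["messages"]:
    print(f'{message["role"]}: {message["content"]}')
```

Passing the whole history to the backend on each turn is one possible design; it lets the model see earlier questions, at the cost of longer prompts as the conversation grows.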

Tech Stack:

- Python, FastAPI, Streamlit

- AI Stack: Qwen 2.5 series (Alibaba's open-source LLM), QLoRA, Unsloth

- Tools & Platform: Kaggle Notebook, Visual Studio Code, Hugging Face, Pyngrok

- API Testing: Postman, Swagger UI

- Technique: Parameter-Efficient Fine-Tuning (QLoRA), End-to-end LLM Deployment, Web-App Integration