Facial Emotion Recognition with CNN & Attention Fine-Tuning

Project type

Kaggle Notebook, Deep Learning

🖼️ This project performs facial emotion recognition using EfficientNetB0, a convolutional neural network (CNN), enhanced with Squeeze-and-Excitation (SE) attention blocks.

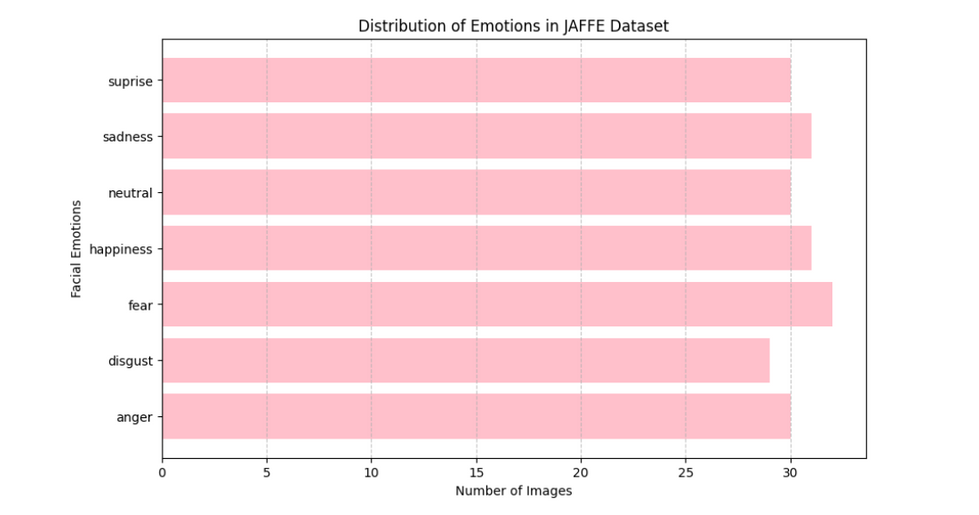

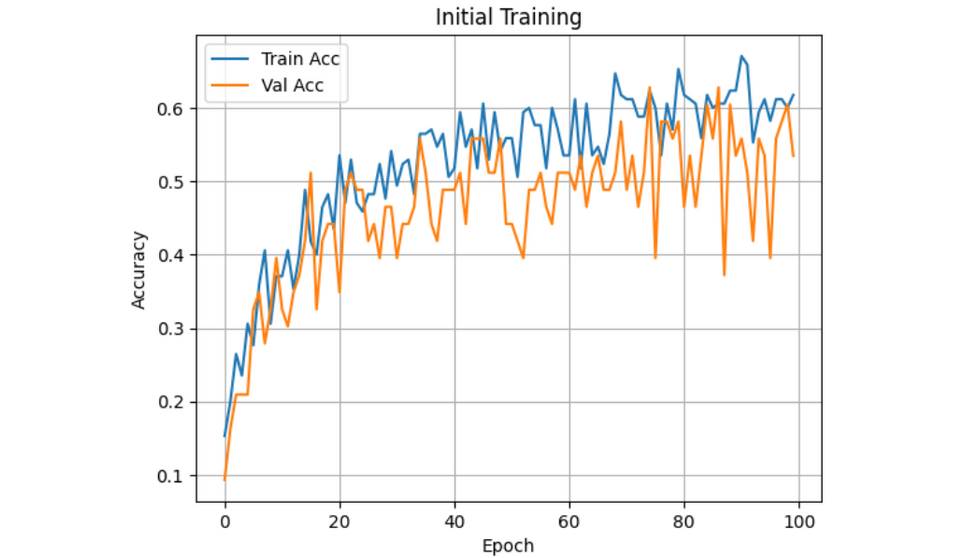

🖼️ Starting from an EfficientNetB0 model pretrained on ImageNet-1K, I applied transfer learning by training only the top (classification) layer for 100 epochs on the JAFFE (Japanese Female Facial Expression) dataset, which gave a baseline validation accuracy of 53.49%.

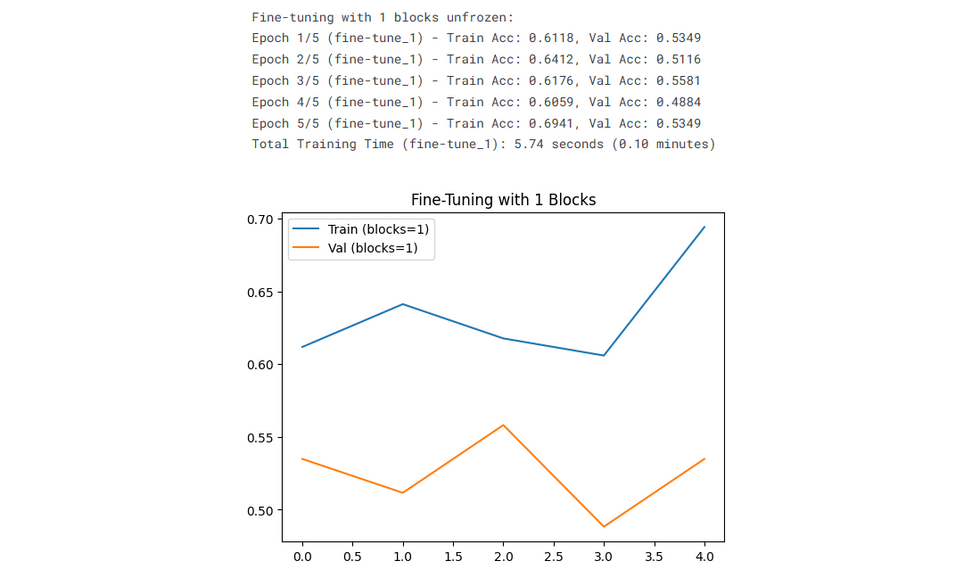

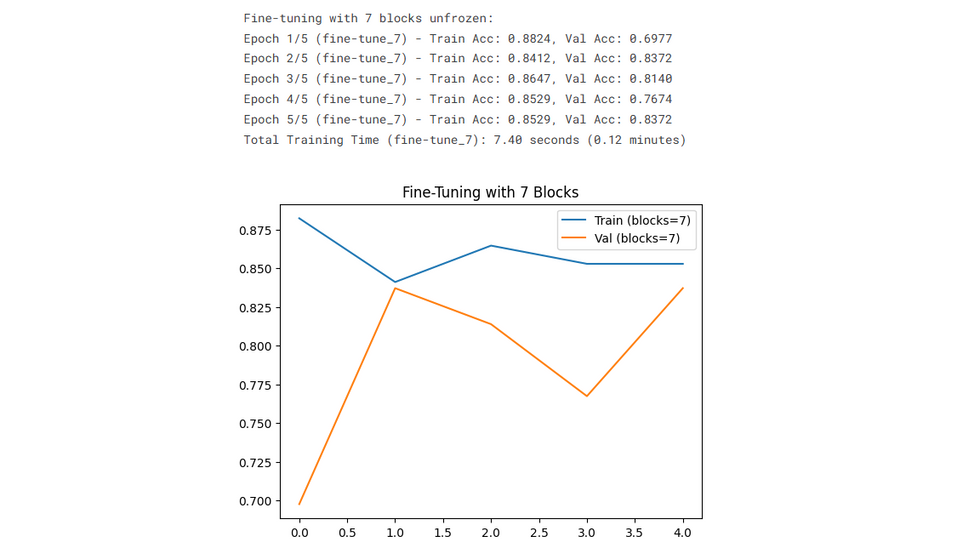

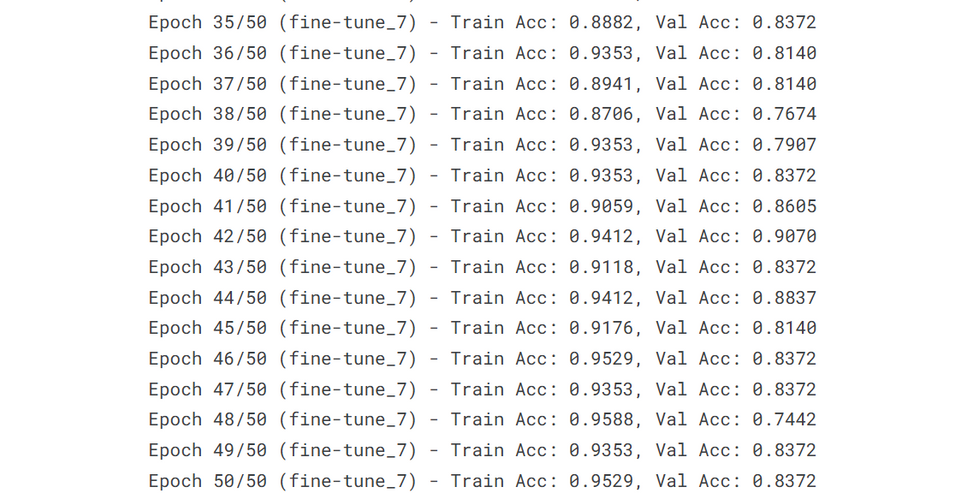

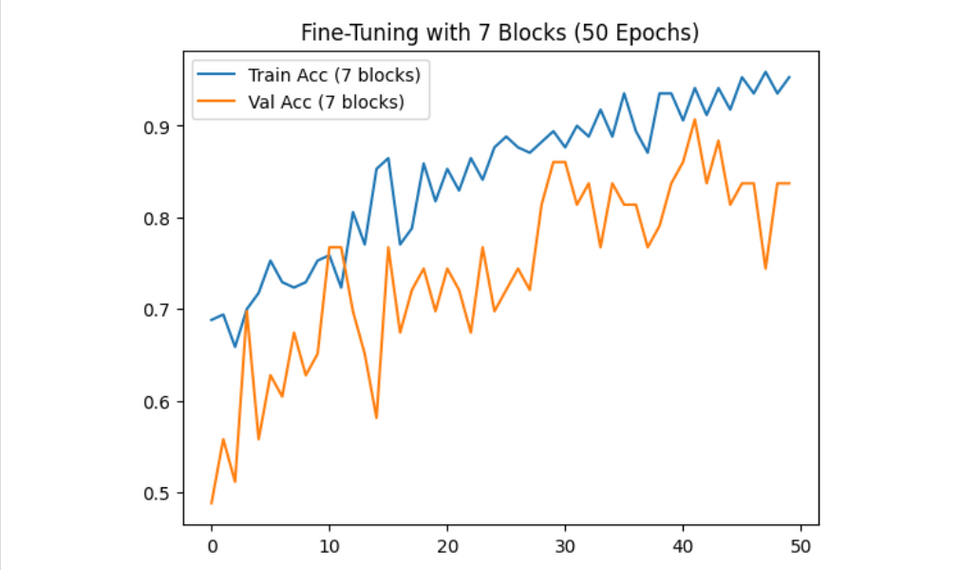

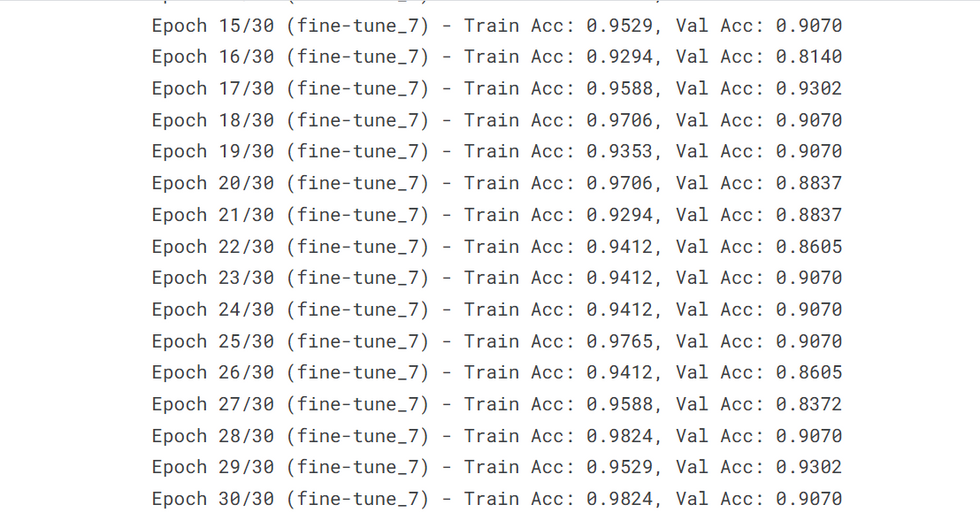

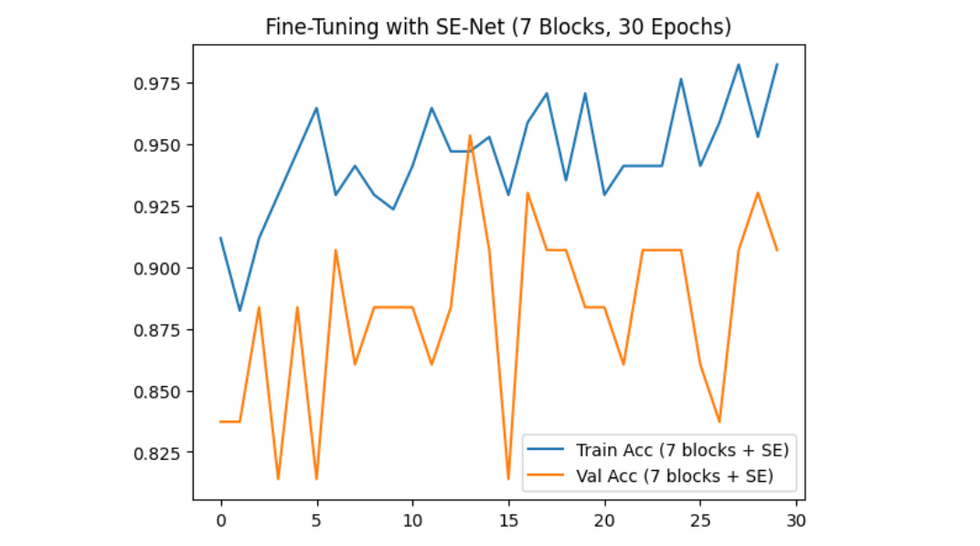

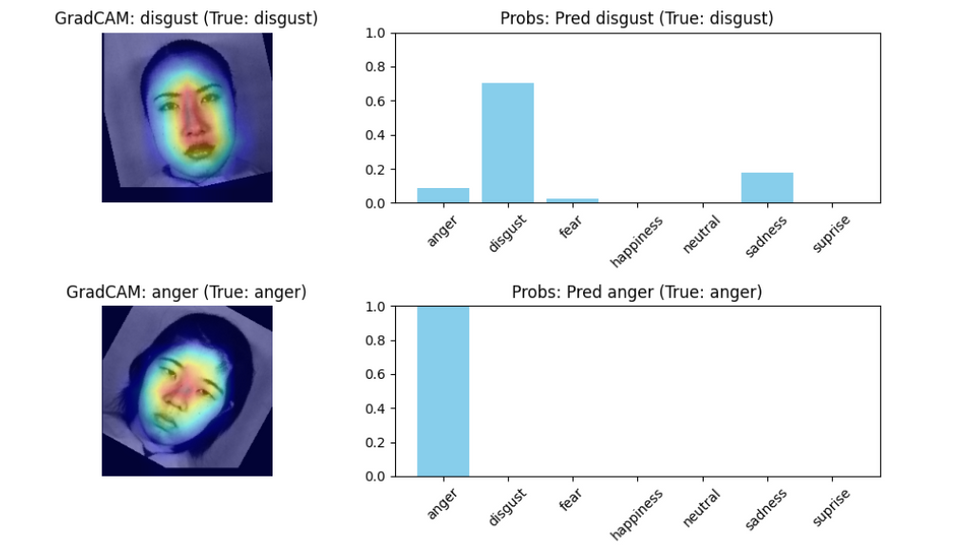

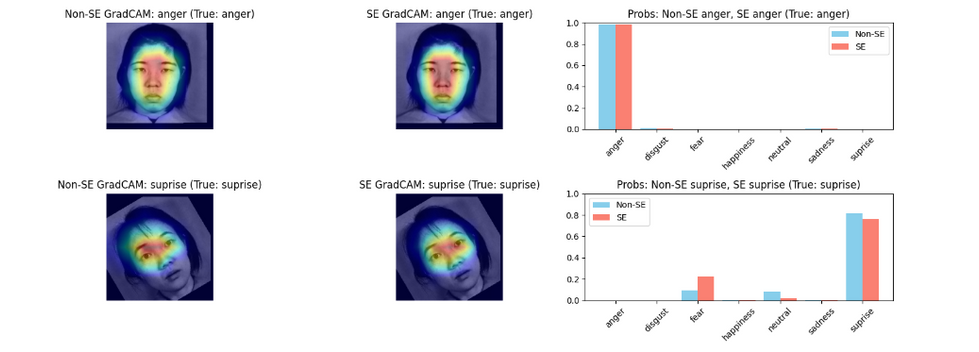

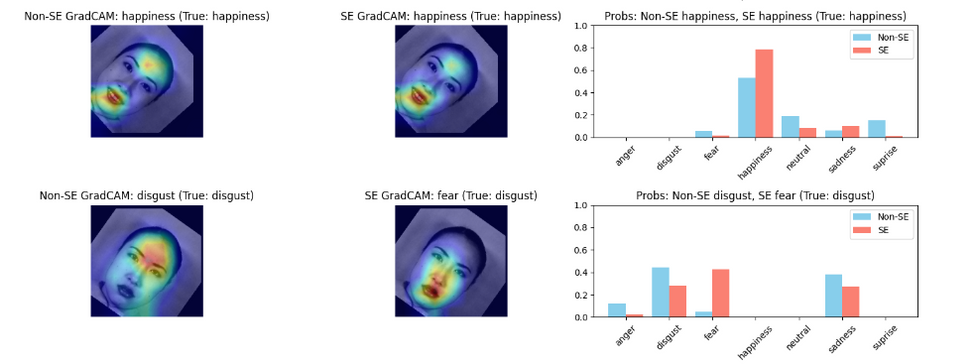

🖼️ Next, I unfroze 7 of the 9 backbone layers and fine-tuned for 50 epochs, raising validation accuracy to 83.72%. Finally, integrating SE blocks and training for 30 epochs raised validation accuracy to 90.7%, with a training accuracy of 98.23%. The gap between training and validation accuracy is about 7.5 percentage points, below the 15% I treat as the sign of overfitting.

🖼️ In general, training only the top (output) layer is insufficient for robust classification. Unfreezing more layers lets the model adapt to the target domain (facial emotions). Adding SE attention, visualized as heatmaps over the face, then outperforms the non-SE version by focusing on emotionally salient features, which makes the model better at distinguishing subtle differences in facial expressions.
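For reference, a standard Squeeze-and-Excitation block can be sketched as below. This is the textbook formulation, not necessarily the exact variant used in the notebook: the class name `SEBlock`, the `reduction=16` ratio, and where the block is attached in the network are all assumptions.

```python
import torch
from torch import nn


class SEBlock(nn.Module):
    """Channel attention: squeeze spatially, then rescale each channel."""

    def __init__(self, channels: int, reduction: int = 16) -> None:
        super().__init__()
        hidden = max(channels // reduction, 1)
        self.pool = nn.AdaptiveAvgPool2d(1)  # squeeze: one value per channel
        self.fc = nn.Sequential(             # excitation: per-channel gates
            nn.Linear(channels, hidden),
            nn.ReLU(inplace=True),
            nn.Linear(hidden, channels),
            nn.Sigmoid(),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        batch, channels, _, _ = x.shape
        gates = self.pool(x).view(batch, channels)
        gates = self.fc(gates).view(batch, channels, 1, 1)
        # Each channel is scaled by a gate in (0, 1); shape is unchanged.
        return x * gates
```

Because the block preserves the input shape, it can be placed after any convolutional stage, for example on the 1280-channel feature map that EfficientNetB0 produces before pooling.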

Tech Stack

- Kaggle Notebook, Python, PyTorch, Deep Learning

- Techniques: EfficientNetB0 (CNN), SE Blocks (Attention Mechanism), Transfer Learning, Fine-tuning

- Process: Data preprocessing, Feature extraction, Model development and optimization