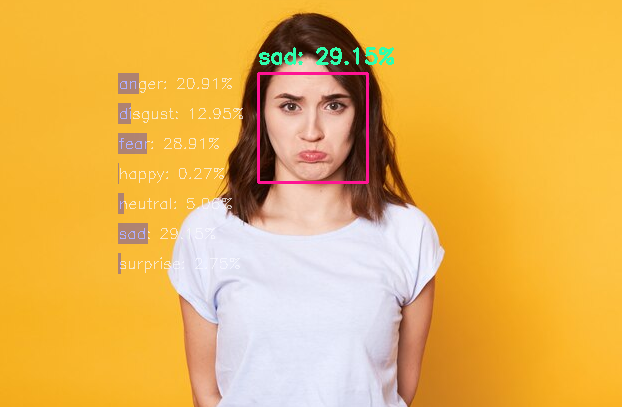

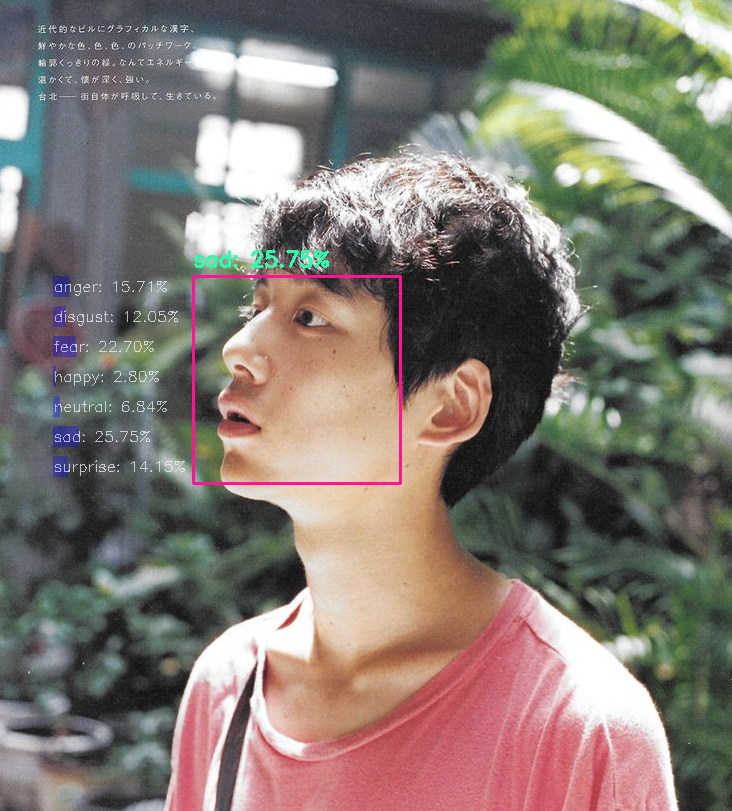

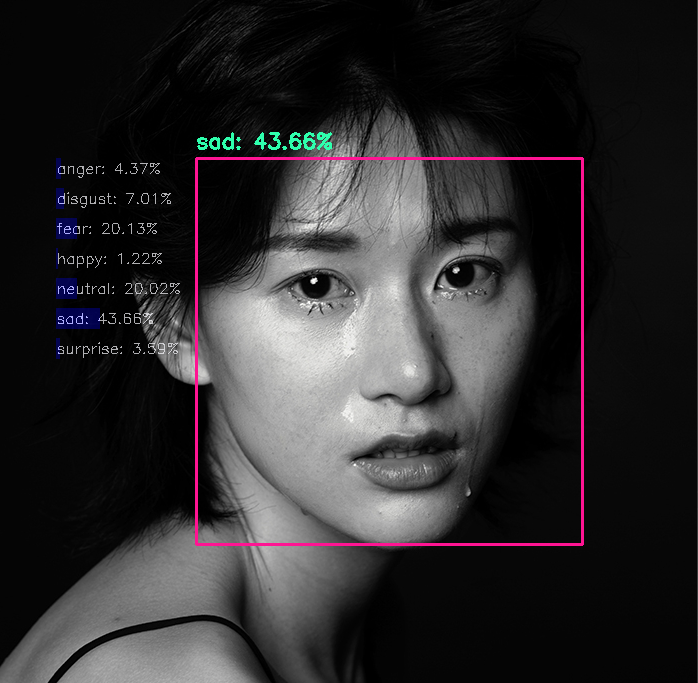

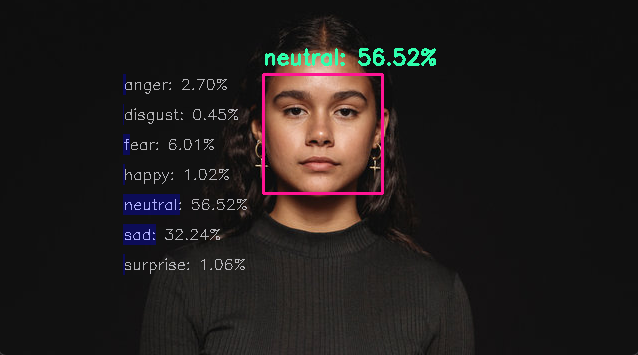

EmoVision: Real-Time Facial Emotion Detection with Transformers

Project type

Deep Learning, Computer Vision

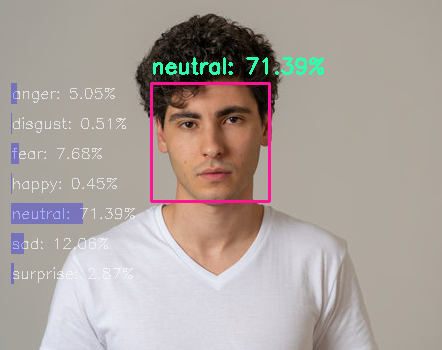

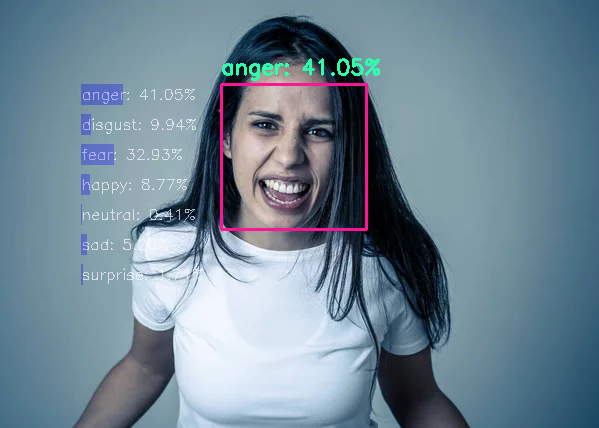

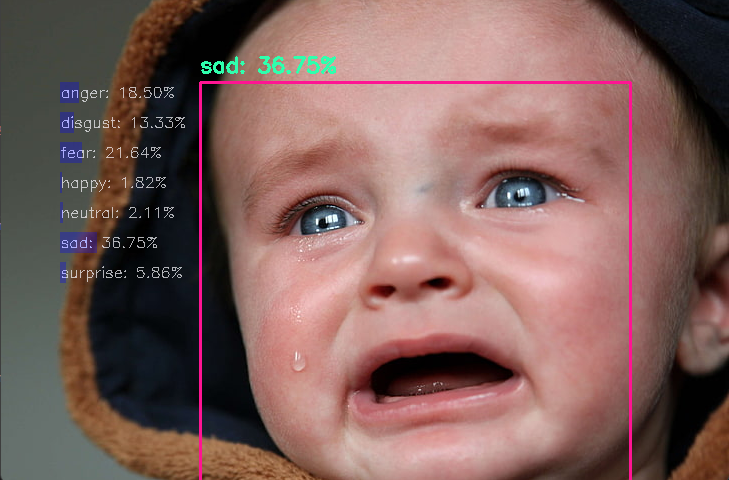

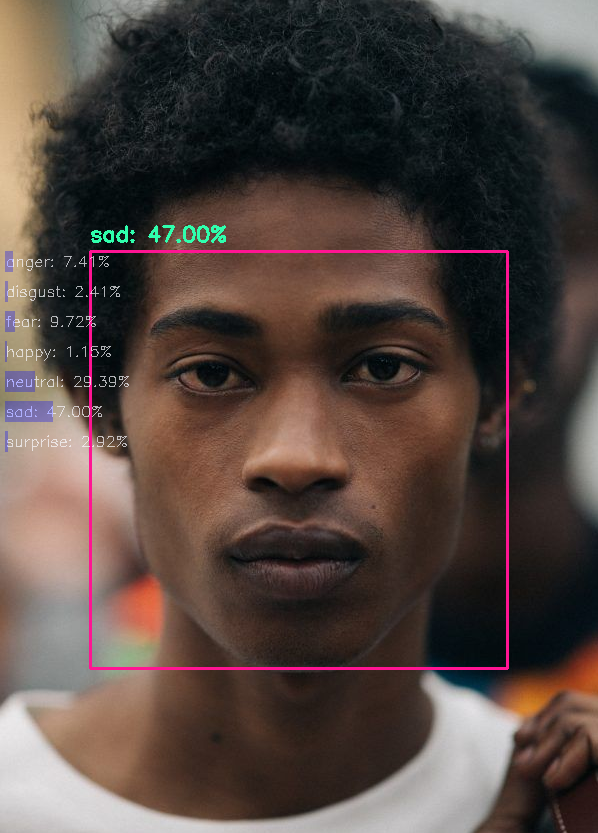

📹 EmoVision is a real-time facial emotion detection system built on the Vision Transformer (ViT) architecture. It classifies emotions from both webcam feeds and uploaded images and is trained on the FER-2013 dataset.
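To make the classification step concrete, here is a minimal sketch of turning a model's raw outputs for one face into an emotion label. It is an illustration rather than the project's actual code: the `decode_prediction` helper and the example logits are hypothetical, and the label order assumes the standard FER-2013 index mapping.

```python
import math

# Assumed FER-2013 label order (index -> emotion), as commonly distributed with the dataset.
FER2013_LABELS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_prediction(logits):
    """Return (label, confidence) for one face's raw model outputs (hypothetical helper)."""
    if len(logits) != len(FER2013_LABELS):
        raise ValueError("expected one logit per FER-2013 class")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return FER2013_LABELS[best], probs[best]


# Made-up logits for a single detected face.
label, confidence = decode_prediction([0.1, -1.2, 0.3, 2.5, 0.0, 0.8, 0.4])
print(f"{label} ({confidence:.2f})")
```

The same decoding would apply whether the face crop came from a webcam frame or an uploaded image.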

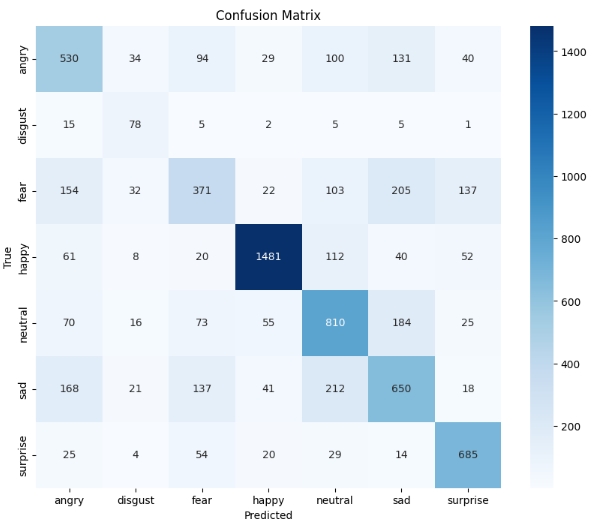

📹 I began with a pretrained ViT model (ImageNet-21k weights) and applied transfer learning: with the backbone frozen, I trained only the classification head for 20 epochs. This gave a baseline validation accuracy of 48.69% on FER-2013, a challenging dataset with diverse real-world expressions.
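The head-only stage can be sketched roughly as follows in PyTorch. This is not the project's training code: `TinyViT` is a hypothetical stand-in for the pretrained model (so nothing needs to be downloaded), and the batch, sizes, and learning rate are made up for illustration.

```python
import torch
from torch import nn


class TinyViT(nn.Module):
    """Hypothetical stand-in for a pretrained ViT: encoder blocks plus a linear head."""

    def __init__(self, dim=32, depth=6, num_classes=7):
        super().__init__()
        self.blocks = nn.ModuleList([
            nn.TransformerEncoderLayer(d_model=dim, nhead=4, dim_feedforward=64, batch_first=True)
            for _ in range(depth)
        ])
        self.head = nn.Linear(dim, num_classes)

    def forward(self, tokens):
        for block in self.blocks:
            tokens = block(tokens)
        return self.head(tokens[:, 0])  # classify from the first (class) token


def freeze_backbone(model):
    """Freeze every parameter, then re-enable gradients for the head only."""
    for param in model.parameters():
        param.requires_grad = False
    for param in model.head.parameters():
        param.requires_grad = True


model = TinyViT()
freeze_backbone(model)
optimizer = torch.optim.AdamW([p for p in model.parameters() if p.requires_grad], lr=1e-3)

# One illustrative step on random data standing in for a FER-2013 batch.
tokens = torch.randn(8, 10, 32)      # (batch, tokens, dim)
labels = torch.randint(0, 7, (8,))   # seven emotion classes
loss = nn.functional.cross_entropy(model(tokens), labels)
loss.backward()
optimizer.step()

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)
```

Only the head's weight and bias reach the optimizer, so the pretrained features stay untouched during this stage.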

📹 To improve performance, I fine-tuned the model by unfreezing 4 additional layers and training for 10 more epochs to adapt it to the facial emotion domain. This raised validation accuracy to 64.15%, close to the 66–79% range reported on the PapersWithCode SOTA leaderboard. The model also generalized to unseen data in practice, handling both real-time webcam detection and uploaded images.
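A rough sketch of this second stage, assuming the "4 additional layers" are the last four encoder blocks. The `unfreeze_last_blocks` helper, the 12-block stand-in backbone, and the two learning rates are all hypothetical. Giving the pretrained blocks a smaller learning rate than the head is a common fine-tuning choice, not something the project is known to have used.

```python
import torch
from torch import nn


def unfreeze_last_blocks(blocks, n):
    """Enable gradients for the last n blocks; earlier blocks stay frozen."""
    if n <= 0:
        return
    for block in list(blocks)[-n:]:
        for param in block.parameters():
            param.requires_grad = True


# Hypothetical stand-in for a backbone after head-only training:
# 12 frozen blocks plus an already trainable 7-class head.
blocks = nn.ModuleList([nn.Linear(16, 16) for _ in range(12)])
head = nn.Linear(16, 7)
for param in blocks.parameters():
    param.requires_grad = False

unfreeze_last_blocks(blocks, n=4)

# Illustrative learning rates: smaller for pretrained blocks, larger for the head.
backbone_params = [p for p in blocks.parameters() if p.requires_grad]
optimizer = torch.optim.AdamW([
    {"params": backbone_params, "lr": 1e-5},
    {"params": list(head.parameters()), "lr": 1e-4},
])

unfrozen = [i for i, b in enumerate(blocks) if all(p.requires_grad for p in b.parameters())]
print(unfrozen)
```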

📹 Unlike traditional CNNs, which build features from local patches and may miss broader context, ViT uses a self-attention mechanism to relate every region of the face to every other region at once, capturing global relationships between features such as the eyes and mouth for robust emotion detection.
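The idea of every patch attending to every other patch can be shown with a minimal single-head self-attention sketch. The sizes are assumptions (196 patches corresponds to a 224x224 input cut into 16x16 patches), the projection weights are random rather than learned, and a real ViT adds multiple heads, positional embeddings, and a class token.

```python
import torch


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence of patch tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.transpose(-2, -1) / (k.shape[-1] ** 0.5)
    weights = torch.softmax(scores, dim=-1)  # one row of attention weights per patch
    return weights @ v, weights


torch.manual_seed(0)
num_patches, dim = 196, 64  # assumed sizes for illustration
tokens = torch.randn(num_patches, dim)
w_q, w_k, w_v = (torch.randn(dim, dim) / dim ** 0.5 for _ in range(3))

out, weights = self_attention(tokens, w_q, w_k, w_v)
print(out.shape, weights.shape)
```

The `weights` matrix has one row and one column per patch, so a patch covering the mouth can draw directly on a patch covering the eyes within a single layer.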

Tech Stack:

- Tools: Python, PyTorch, OpenCV, MediaPipe

- Techniques: Deep Learning, Vision Transformer (ViT), Transfer Learning, Fine-tuning, Model Training